Leaning Forward: How Riverside Research Advances Agentic AI for National Security

Author(s): Matthew May

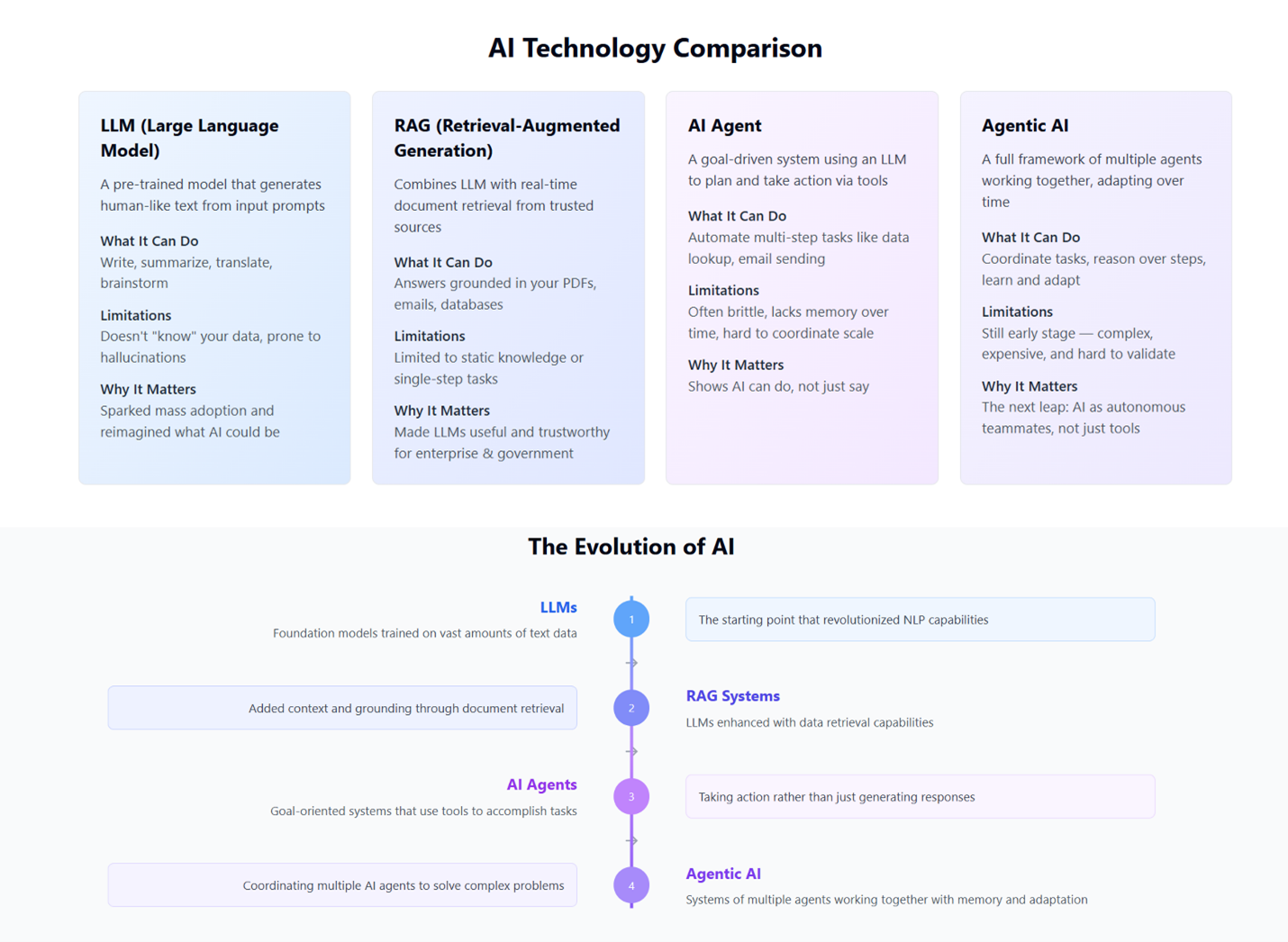

Artificial Intelligence (AI) has evolved rapidly in recent years, moving beyond simple buzzwords to become a transformative force. AI technology has progressed from basic text generation to complex systems capable of planning, acting, and reasoning. This evolution is marked by distinct stages, each introducing new levels of intelligence and autonomy with significant operational relevance for national security.

For many professionals in the national security space, the pace of change can be difficult to follow. Questions such as “What is an AI agent?”, “How is that different from ChatGPT?”, and “What exactly is agentic AI?” are common. The following step-by-step examples, grounded in national security, clarify this evolution, showing not just what is possible but what is already happening.

Stage 1: Large Language Models (LLMs) | The Spark

The mainstream breakthrough for modern AI came in late 2022, when ChatGPT brought Large Language Models (LLMs) to a mass audience. These models demonstrated remarkable fluency in writing, summarizing, and explaining complex topics in response to a clear prompt. This initial stage can be thought of as using AI to draft a concept of operations (CONOPS), threat summary, or executive briefing.

Despite their power, standalone LLMs have clear limits: they do not take action, access internal systems, or know anything beyond the data they were trained on.

- National Security Example: An analyst asks an early LLM, “What is the difference between electro-optical (EO) and synthetic aperture radar (SAR) imagery?” The model gives a reasonable explanation but remains unaware of recent operational collection events, priority regions, or how those phenomenologies were applied in recent missions.

Stage 2: Retrieval-Augmented Generation (RAG) | Making AI Operational

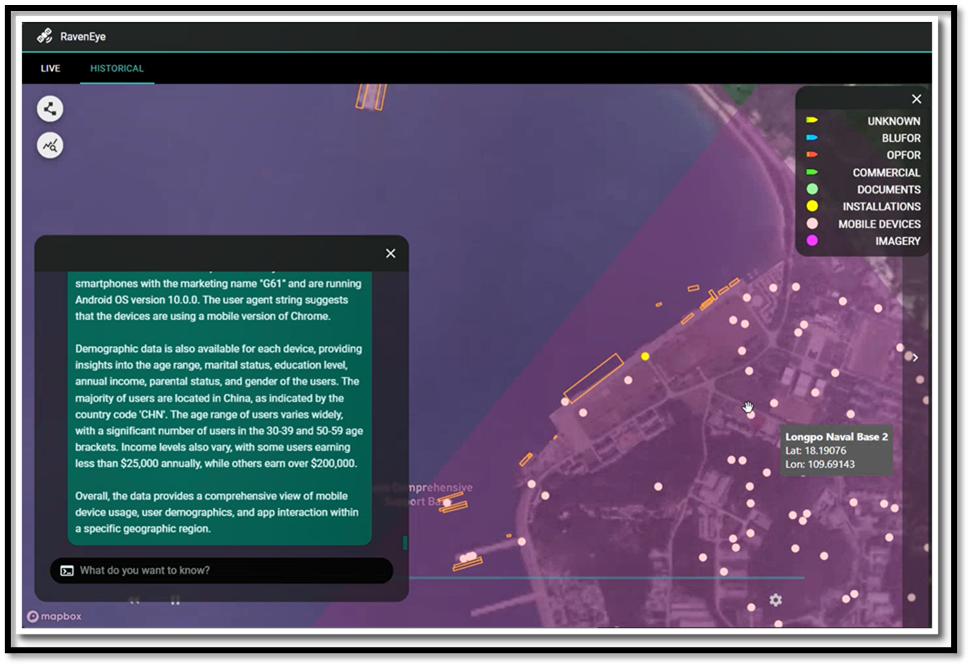

The next stage makes AI operational by giving it access to timely, relevant data. A RAG system functions like a smart assistant that reads intelligence reports, travel logs, or collection briefs before formulating a response. At query time, it retrieves the most relevant passages from live documents, databases, and internal reports and passes them to the LLM as context, so answers are grounded in current source data rather than only in general training information.

- National Security Example: A user asks, “Where has this vessel operated in the past 72 hours?” A RAG-enabled assistant can search maritime telemetry feeds, commercial Automatic Identification System (AIS) records, and archived satellite imagery indexes. It then returns a plotted route with timestamps, flags deviations from known patterns, and provides a source link for each data point. A task that once took a team of analysts hours or days can now happen in under a minute, with every result traceable to its source.
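To make the retrieve-then-generate pattern concrete, here is a minimal Python sketch. It is illustrative only and is not Riverside Research's implementation: the three-document corpus, its IDs, and the helper names (`retrieve`, `build_prompt`) are hypothetical, and a simple bag-of-words cosine similarity stands in for the vector embeddings that production RAG systems typically use. The step that sends the finished prompt to an LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory corpus standing in for telemetry, AIS, and imagery indexes.
DOCS = {
    "ais-001": "Vessel ALPHA reported position near port A at 0600Z",
    "ais-002": "Vessel ALPHA changed course toward strait B at 1800Z",
    "wx-001": "Weather advisory: high winds expected over region C",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs.items(), key=lambda item: cosine(q, Counter(tokenize(item[1]))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Augmentation step: prepend ID-tagged sources so the answer stays traceable."""
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, docs, k))
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )
```

For example, `build_prompt("Where has vessel ALPHA operated?", DOCS)` returns a prompt containing only the two AIS entries, each tagged with its ID, which is what lets the final answer cite its sources.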

Stage 3: AI Agents | Doing Tactical Tasks

AI agents represent the shift from answering questions to actively performing tasks. These agents pair LLMs with tools such as APIs, dashboards, and scripting environments, allowing them to take action, complete multi-step tasks, and prepare outputs, often without additional user input.

- National Security Example: An AI agent is assigned to monitor real-time air traffic feeds across a sensitive airspace corridor. When a high-interest aircraft deviates from its expected flight profile, the agent independently pulls its recent flight history, cross-references relevant airspace alerts, analyzes past behavior patterns, and compiles a summary report for the mission lead, complete with a timeline, trajectory overlay, and risk indicators.
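The monitoring behavior in this example can be sketched as a trigger plus a set of tools. In this hypothetical Python sketch, the `Track` and `Profile` fields, the tolerance values, and the three tool stubs are all assumptions standing in for real flight feeds and APIs. A fixed tool sequence also replaces the LLM that, in an actual agent, would decide which tools to call and in what order.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Track:
    callsign: str
    heading_deg: float
    altitude_ft: float


@dataclass
class Profile:
    """Expected flight profile with hypothetical tolerances."""
    heading_deg: float
    altitude_ft: float
    heading_tol_deg: float = 15.0
    altitude_tol_ft: float = 2000.0


def heading_error(a: float, b: float) -> float:
    """Smallest angle between two headings, in degrees (handles 360/0 wraparound)."""
    diff = abs(a - b) % 360
    return min(diff, 360 - diff)


def deviates(track: Track, profile: Profile) -> bool:
    """Trigger: heading or altitude falls outside the expected profile's tolerance."""
    return (
        heading_error(track.heading_deg, profile.heading_deg) > profile.heading_tol_deg
        or abs(track.altitude_ft - profile.altitude_ft) > profile.altitude_tol_ft
    )


# Hypothetical tool stubs; a deployed agent would call real data services here.
TOOLS: dict[str, Callable[[str], str]] = {
    "flight_history": lambda cs: f"history for {cs}",
    "airspace_alerts": lambda cs: f"alerts near {cs}",
    "behavior_patterns": lambda cs: f"patterns for {cs}",
}


def run_agent(track: Track, profile: Profile) -> Optional[dict]:
    """Act without user input: on deviation, call every tool and compile a report."""
    if not deviates(track, profile):
        return None
    findings = {name: tool(track.callsign) for name, tool in TOOLS.items()}
    return {"callsign": track.callsign, "trigger": "profile deviation", "findings": findings}
```

Keeping tools in a name-to-function registry makes it straightforward to add or swap a data source without changing the agent's control logic.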

Stage 4: Agentic AI | Working Toward a Mission Goal

Agentic AI introduces a collaborative framework in which systems understand intent, create plans, and coordinate tasks across multiple agents and tools to achieve a mission outcome. Instead of simply following instructions, these systems can decompose a high-level goal into sub-tasks and adapt dynamically to changing conditions.

- National Security Example: A user gives the high-level prompt, “Identify active threats targeting U.S. satellite communications infrastructure.” The agentic AI then orchestrates a series of actions:

- Launches a search agent to comb open-source threat intelligence feeds.

- Deploys a pattern-matching agent to analyze historical intrusion logs.

- Flags anomalous beaconing patterns from known threat actor infrastructure.

- Generates a visual map of likely access vectors.

- Produces a draft cyber threat bulletin tailored for a strategic communications audience.
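One common way to orchestrate steps like these is to express the plan as a dependency graph and run each sub-task once its inputs are ready. The Python sketch below does this with the standard library's `graphlib.TopologicalSorter`. The plan is hard-coded and the agents are stubs that only record their inputs; in an agentic system an LLM planner would generate the plan from the user's goal and could revise it as conditions change. The task names are hypothetical labels for the five actions listed above.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each task maps to the set of tasks whose output it needs.
PLAN = {
    "osint_search": set(),
    "log_pattern_match": set(),
    "flag_beaconing": {"osint_search", "log_pattern_match"},
    "map_access_vectors": {"flag_beaconing"},
    "draft_bulletin": {"osint_search", "flag_beaconing", "map_access_vectors"},
}


def make_agent(name: str):
    """Build a stub agent that reports which upstream results it received."""
    def agent(inputs: dict[str, str]) -> str:
        return f"{name}({', '.join(sorted(inputs))})"
    return agent


AGENTS = {name: make_agent(name) for name in PLAN}


def orchestrate(plan: dict[str, set[str]], agents) -> dict[str, str]:
    """Run every task after its dependencies, feeding it their outputs."""
    results: dict[str, str] = {}
    for task in TopologicalSorter(plan).static_order():
        upstream = {dep: results[dep] for dep in plan[task]}
        results[task] = agents[task](upstream)
    return results
```

Because `draft_bulletin` depends on the search, flagging, and mapping tasks, the orchestrator always runs it last and passes it the results of those three tasks.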

Stage 5: Cognitive Agent Ecosystems | Cross-Domain Learning

This stage involves multi-agent systems that persist across tasks, build a shared memory, and improve over time. These ecosystems can learn user preferences, mission priorities, and operational context across different domains, allowing intelligence operations to scale while becoming steadily more efficient.

- National Security Example: A multi-intelligence orchestration system connects to sensor tasking systems. Its agents remember prior requests, which allows them to optimize tasking efficiency over time and surface emerging threats before a human even asks. This capability is used to reduce tasking bottlenecks and prototype persistent custody tracking across areas of interest.
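A shared memory is what lets separate agents build on each other's history. The Python sketch below is a hypothetical, deliberately simple version: an in-process store that counts past requests per area of interest, orders new tasking by that history, and flags an area as emerging when it dominates the most recent requests. The class name, window size, and 60% threshold are illustrative assumptions; a deployed ecosystem would need persistent, access-controlled storage.

```python
from collections import Counter, deque


class SharedMemory:
    """Hypothetical store of past tasking requests, shared by every agent."""

    def __init__(self, window: int = 5):
        self.history = Counter()            # all-time request count per area
        self.recent = deque(maxlen=window)  # only the most recent requests

    def record(self, area: str) -> None:
        self.history[area] += 1
        self.recent.append(area)

    def prioritize(self, pending: list[str]) -> list[str]:
        """Order pending tasking so historically busy areas come first."""
        return sorted(pending, key=lambda area: self.history[area], reverse=True)

    def emerging(self, min_share: float = 0.6) -> list[str]:
        """Areas that make up at least min_share of recent requests."""
        if not self.recent:
            return []
        counts = Counter(self.recent)
        return [area for area, n in counts.items() if n / len(self.recent) >= min_share]
```

Any number of agents can hold a reference to the same `SharedMemory` instance, so a request recorded by one agent immediately influences how another prioritizes its work.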

Stage 6: Emergent Multi-Agent Autonomy | Strategic Reasoning at Scale

The current frontier is a phase in which decentralized networks of AI agents collaborate, improve themselves, and manage mission workflows at operational tempo. These agents can collectively manage surveillance of key regions, adjust priorities in real time, and support command-level decision-making, functioning as a seamless, fully autonomous team.

- National Security Example: To support real-time Intelligence, Surveillance, and Reconnaissance (ISR), an autonomous loop is created:

- One agent classifies radiofrequency (RF) signals over an area of interest.

- A second agent correlates that RF data with electro-optical (EO) detections.

- A third agent triggers satellite re-tasking requests based on the correlation.

- A fourth agent generates mission updates for the Watch Officer.
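The four-agent loop above can be sketched as a chain of functions in which each agent consumes the previous agent's output. In this hypothetical Python sketch, the data classes, the 1 GHz radar/comms cutoff, and the 5 km correlation radius are illustrative assumptions, not operational parameters, and the re-tasking requests are plain dictionaries rather than calls to any real tasking system. A single pass is shown; in practice the loop would repeat as new RF and EO data arrive.

```python
import math
from dataclasses import dataclass


@dataclass
class RFHit:
    lat: float
    lon: float
    freq_mhz: float


@dataclass
class EODetection:
    lat: float
    lon: float
    label: str


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on a spherical Earth, in km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlam / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def rf_classifier_agent(hits):
    """Agent 1: label each RF hit. The 1 GHz cutoff is a toy rule, not a real taxonomy."""
    return [(hit, "radar" if hit.freq_mhz >= 1000 else "comms") for hit in hits]


def correlation_agent(classified, detections, max_km=5.0):
    """Agent 2: pair classified RF hits with EO detections that lie within max_km."""
    return [
        (label, det)
        for hit, label in classified
        for det in detections
        if haversine_km(hit.lat, hit.lon, det.lat, det.lon) <= max_km
    ]


def retasking_agent(correlations):
    """Agent 3: turn each correlation into a hypothetical satellite re-tasking request."""
    return [
        {"lat": det.lat, "lon": det.lon, "reason": f"{label} emitter near {det.label}"}
        for label, det in correlations
    ]


def watch_officer_agent(requests):
    """Agent 4: summarize the requests as a mission update for the Watch Officer."""
    if not requests:
        return "No re-tasking required."
    lines = [f"{r['reason']} at ({r['lat']:.2f}, {r['lon']:.2f})" for r in requests]
    return f"{len(requests)} re-tasking request(s): " + "; ".join(lines)


def run_isr_loop(hits, detections):
    """Chain the four agents; each consumes the previous agent's output."""
    classified = rf_classifier_agent(hits)
    correlations = correlation_agent(classified, detections)
    requests = retasking_agent(correlations)
    return requests, watch_officer_agent(requests)
```

Passing in one high-frequency RF hit located about 1 km from an EO vessel detection yields one re-tasking request and an update that begins `1 re-tasking request(s): radar emitter near vessel`.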

The Future of AI and National Security

As AI becomes more autonomous and capable of setting goals, orchestrating sensors, and delivering intelligence, the responsibility of its human operators grows with it. Agentic AI is not just about automation; it is about intention. These systems will adapt and collaborate to pursue mission outcomes with increasing autonomy, but humans will always remain in the loop to set objectives, guide actions, and make the final decisions. Governance, transparency, and ethical design are therefore essential to ensure these powerful systems serve national security objectives responsibly.

At Riverside Research, we are using internal R&D to develop agentic AI systems that are scalable, secure, and aligned with operational requirements. The objective is to provide capabilities that support the future warfighter by improving decision speed, situational awareness, and adaptability across all domains. The future of intelligence is not about more data or more tools; it’s about better orchestration, grounded in trust, accountability, and purpose.

Ready to learn more about Riverside Research’s agentic AI solutions? Contact us today.

Featured Riverside Research Author(s)

Matthew May

Matthew May is the Director of Cognitive Intelligence Solutions at Riverside Research, leading advanced research and development in Artificial Intelligence, Multi-INT fusion, and autonomous systems for national security applications. He oversees initiatives spanning Agentic AI applied to the Intelligence Cycle, Modular Autonomous Payloads (MAP), and Dynamic Intelligence Orchestration (DIO), driving innovation across multi-domain data environments for DoD and Intelligence Community customers.

With a background that bridges defense innovation and applied technology, Matthew has led programs supporting multiple intelligence agencies—delivering prototypes that integrate AI agents, sensor data, and real-time situational awareness to accelerate decision-making at the tactical edge.

Prior to Riverside Research, Matthew founded and led multiple technology startups focused on open-source intelligence and analytical automation, including Applied Technology Solutions (ATS), whose software has been leveraged by government and non-profit organizations to combat human trafficking and transnational threats.

His work focuses on fusing human and machine intelligence to advance the future of autonomous decision support and Intelligence-as-a-Service.


The above listed authors are current or former employees of Riverside Research. Authors affiliated with other institutions are listed on the full paper. It is the responsibility of the author to list material disclosures in each paper, where applicable – they are not listed here. This academic papers directory is published in accordance with federal guidance to make public and available academic research funded by the federal government.